High-Fidelity Machine Learning Interatomic Potentials with Multi-Fidelity Training

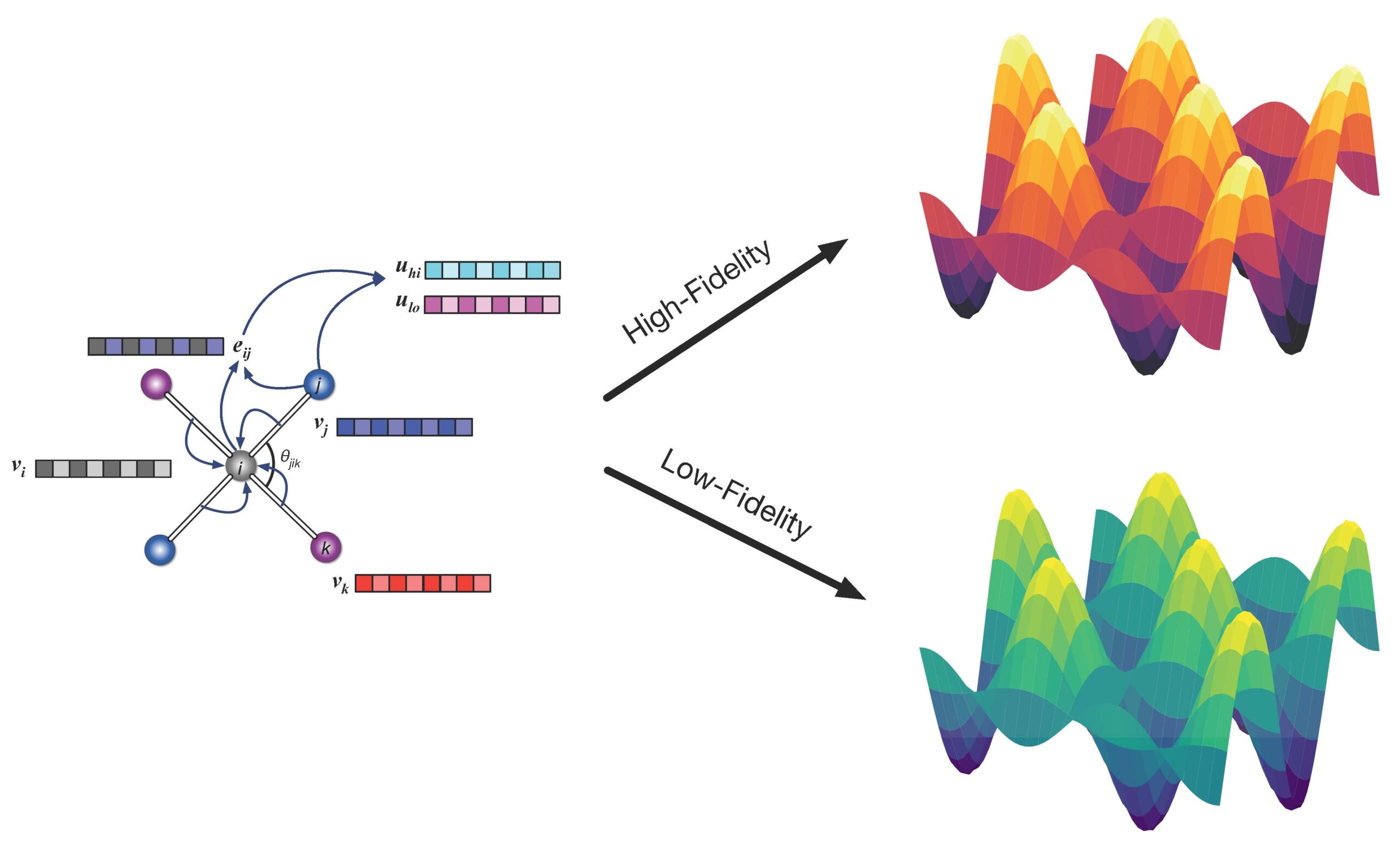

Machine learning interatomic potentials (MLIPs) are powerful tools for atomistic simulations, but training them on high-fidelity quantum mechanical data is costly. Most MLIPs are trained on low-cost PBE calculations, because the more accurate SCAN functional is computationally expensive. In this work, Tsz Wai Ko introduces a multi-fidelity M3GNet approach that reaches SCAN-level accuracy while training on only 10% SCAN data, significantly reducing computational cost.

Key Findings

- Silicon: Captures phase transitions and structural properties with high accuracy.
- Water: Predicts liquid and ice structures better than single-fidelity models.
- Efficiency: Cuts high-fidelity data requirements by up to 90% while improving accuracy.

Why It Matters

- ✔️ Faster, cheaper MLIPs for materials simulations.
- ✔️ Better generalization to unseen materials.
- ✔️ A pathway to universal, high-fidelity interatomic potentials.

Read the full paper here.
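To make the 10% figure concrete, here is a minimal sketch of how a mixed-fidelity training set might be assembled. It assumes every structure has a low-cost PBE label and only a random subset also has a SCAN label, with each sample tagged by an integer fidelity index. The function name `build_multifidelity_set`, the `PBE`/`SCAN` constants, and the sampling scheme are hypothetical illustrations, not the paper's actual pipeline or the M3GNet API.

```python
import random

# Hypothetical integer fidelity tags (an assumption for this sketch).
PBE, SCAN = 0, 1


def build_multifidelity_set(structure_ids, high_fidelity_fraction=0.1, seed=0):
    """Return (structure_id, fidelity) pairs.

    Every structure contributes a PBE-labelled sample; a random subset of
    size high_fidelity_fraction * N additionally contributes a SCAN-labelled
    sample.
    """
    rng = random.Random(seed)
    n_high = round(high_fidelity_fraction * len(structure_ids))
    high_ids = set(rng.sample(structure_ids, n_high))

    # Low-fidelity labels for all structures.
    samples = [(sid, PBE) for sid in structure_ids]
    # High-fidelity labels only for the selected subset.
    samples += [(sid, SCAN) for sid in structure_ids if sid in high_ids]
    return samples


samples = build_multifidelity_set(list(range(1000)))
n_scan = sum(1 for _, fidelity in samples if fidelity == SCAN)
print(f"{len(samples)} samples, {n_scan} with SCAN labels")
```

A model trained on such a set would need some way to condition its predictions on the fidelity tag, so that the plentiful PBE samples inform the shared representation while the few SCAN samples set the target accuracy. How that conditioning is done is left to the full paper.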