Unlocking Superionic Sodium Transport and Synthesis with MLIPs

Check out the work here.

Creating It from Bit

Read the full paper here: ACS Nano Letters – Superionic Surface Li-Ion Transport in Carbonaceous Materials

Graph deep learning is transforming materials science by enabling accurate, scalable, and efficient predictions of material properties and potential energy surfaces (PES). Our article on “Materials Graph Library (MatGL), an open-source, modular framework purpose-built for materials science and chemistry” has been published in npj Computational Materials. MatGL started as a collaboration between Intel Labs and the Materials Virtual Lab to provide a “batteries-included” environment for graph deep learning on materials.

We show that MatGL’s models achieve state-of-the-art accuracy on widely used datasets (QM9, Matbench, ANI-1x, MPF, MatPES) while maintaining competitive computational efficiency. The library’s design also supports fine-tuning, enabling rapid adaptation to new materials systems.

Read the full paper here and explore MatGL on GitHub.

Machine learning interatomic potentials (MLIPs) are powerful tools for atomistic simulations, but training them with high-fidelity quantum mechanical data is costly. Most MLIPs are trained on low-cost PBE calculations, because data from the more accurate SCAN functional is far more expensive to compute.

In this work, Tsz Wai Ko introduces a multi-fidelity M3GNet approach that can achieve SCAN-level accuracy with 10% SCAN data, reducing computational costs significantly.
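To make the 10% figure concrete, here is a minimal sketch of how a multi-fidelity training set might be assembled. It assumes every structure carries a cheap PBE label, that a random 10% subset also carries a SCAN label, and that the model receives an integer fidelity index with each entry. The function name, the integer encoding, and the structure IDs are all hypothetical and are not taken from the paper.

```python
import random

# Illustrative fidelity encoding; the integer values are an assumption.
FIDELITY = {"PBE": 0, "SCAN": 1}


def build_multifidelity_set(structure_ids, scan_fraction=0.10, seed=0):
    """Tag every structure with a PBE label and a random subset with SCAN.

    Returns a list of (structure_id, fidelity_index) training entries.
    """
    rng = random.Random(seed)
    n_scan = round(scan_fraction * len(structure_ids))
    scan_ids = set(rng.sample(structure_ids, n_scan))

    # Every structure contributes a low-fidelity (PBE) entry...
    entries = [(sid, FIDELITY["PBE"]) for sid in structure_ids]
    # ...and only the sampled subset contributes a high-fidelity (SCAN) entry.
    entries += [(sid, FIDELITY["SCAN"]) for sid in structure_ids if sid in scan_ids]
    return entries


# Hypothetical structure identifiers, for illustration only.
structure_ids = [f"struct-{i:04d}" for i in range(1000)]
entries = build_multifidelity_set(structure_ids)

n_pbe = sum(1 for _, f in entries if f == FIDELITY["PBE"])
n_scan = sum(1 for _, f in entries if f == FIDELITY["SCAN"])
print(n_pbe, n_scan)
```

With 1000 structures, only 100 need the expensive SCAN calculation, while the PBE entries cover the full configuration space.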

Key Findings

Silicon: Captures phase transitions & structural properties with high accuracy.

Water: Predicts liquid & ice structures better than single-fidelity models.

Efficiency: Cuts high-fidelity data requirements by up to 90% while improving accuracy.

Why It Matters

✔️ Faster, cheaper MLIPs for materials simulations.

✔️ Better generalization to unseen materials.

✔️ A pathway to universal, high-fidelity interatomic potentials.

Read the full paper here.

In 2020, Prof Ping Liu’s group and our group proposed a highly promising anode material, disordered rock salt (DRS) Li3V2O5 (LVO), which has a near-ideal voltage of 0.6 V vs Li/Li+ and fast Li diffusion. In this work, we show that Mg doping further increases the energy density of this anode. Because Mg doping raises the Li site energy, the voltage of the LVO anode is reduced by a further 10%, while low Li migration barriers are retained. Mg-doped LVO retains over 95% of its capacity over 1000 cycles at a rate of 5 C. Full cells with a LiNi0.8Co0.1Mn0.1O2 cathode demonstrate the expected increase in cell voltage and energy density while retaining 91% of their capacity over 250 cycles at 5 C.
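The numbers above can be turned into a quick back-of-the-envelope check. The sketch below reads the 10% reduction as relative to the 0.6 V quoted for undoped LVO, and converts the quoted capacity retentions into an average per-cycle retention under the simplifying assumption that the same fraction of capacity is lost every cycle. Both readings are assumptions made for illustration, and the function name is hypothetical.

```python
def per_cycle_retention(total_retention, n_cycles):
    """Average per-cycle retention, assuming a constant fractional fade per cycle."""
    return total_retention ** (1.0 / n_cycles)


# Anode voltage: 0.6 V for undoped LVO, lowered by a further 10% with Mg doping.
anode_v_undoped = 0.6
anode_v_doped = anode_v_undoped * (1 - 0.10)

# Full-cell voltage is cathode minus anode, so for a fixed cathode the cell
# gains exactly what the anode loses.
cell_voltage_gain = anode_v_undoped - anode_v_doped

# Retention figures quoted in the text (0.95 is a lower bound, "over 95%").
half_cell_retention = per_cycle_retention(0.95, 1000)
full_cell_retention = per_cycle_retention(0.91, 250)

print(f"doped anode voltage: {anode_v_doped:.2f} V")
print(f"cell voltage gain:   {cell_voltage_gain:.2f} V")
print(f"half-cell fade per cycle: {(1 - half_cell_retention) * 100:.4f} %")
print(f"full-cell fade per cycle: {(1 - full_cell_retention) * 100:.4f} %")
```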

Check out the work here.

Xingyu’s collaborative research with the Chen group, titled “Proton-Exchange Induced Reactivity in Layered Oxides for Lithium-Ion Batteries,” has been published in Nature Communications!

This study addresses a critical challenge in the manufacturing, storage, transportation, processing, and recycling of LiNixCoyMn1-x-yO2 (NCM, 0 < x, y < 1), the dominant cathode material for state-of-the-art lithium-ion batteries. Through an integration of experimental and computational approaches, the team reveals how protons intercalate into the layered NCM structure, triggering Li⁺ leaching and the formation of protonated NCM. This protonation process significantly disrupts the structural integrity of the material, promoting cation rearrangement and the development of impurity phases. These effects are particularly severe in NCMs with higher nickel content.

The study also demonstrates a solution-based approach to mitigate Li deficiencies in NCM materials by leveraging controlled proton concentrations and the presence of Li⁺ ions. The underlying relithiation mechanism is further elucidated through detailed materials characterization and kinetics modeling. This work provides essential insights into managing structural and compositional defects in NCM cathodes, paving the way for improved performance and stability in next-generation lithium-ion batteries.

Check out this work here.

Congratulations to Zishen for his co-authored paper on “Influence of Interlayer Cation Ordering on Na Transport in P2-Type Na0.67–xLiyNi0.33–zMn0.67+zO2 for Sodium-Ion Batteries” published in JACS together with the group of Prof Claire Xiong at Boise State University! In this work, we studied the P2-type Na2/3Ni1/3Mn2/3O2 (PNNMO) cathode for Na-ion batteries. Zishen’s contribution is showing via DFT calculations that Li doping (Na2/3Li0.05Ni1/3Mn2/3O2, LFN5) promotes ABC-type interplanar Ni/Mn ordering without disrupting the Na+/vacancy ordering and creates low-energy Li−Mn-coordinated diffusion pathways. These results are in line with those from neutron/X-ray diffraction. Quasielastic neutron scattering reveals that the Na+ diffusivity in LFN5 is enhanced by an order of magnitude over PNNMO, which increases its capacity at high current. These results suggest that the interlayer ordering can be tuned through the control of composition, and that it has an equal or greater impact on Na+ diffusion than the Na+/vacancy ordering.
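For a sense of scale, an order-of-magnitude gain in diffusivity corresponds to a fairly modest change in migration barrier. The sketch below assumes simple Arrhenius behavior, D = D0 exp(-Ea / kB T), with the same prefactor D0 in both materials and a temperature of 300 K. These are illustrative assumptions, not values reported in the paper, and the function name is hypothetical.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def barrier_reduction_ev(diffusivity_ratio, temperature_k):
    """Barrier drop that yields the given diffusivity ratio.

    Assumes Arrhenius behavior with identical prefactors, so that
    D1 / D0 = exp(delta_Ea / (kB * T)).
    """
    return K_B_EV_PER_K * temperature_k * math.log(diffusivity_ratio)


# A tenfold diffusivity increase at an assumed temperature of 300 K.
delta_ea = barrier_reduction_ev(10.0, 300.0)
print(f"{delta_ea * 1000:.1f} meV")
```

Under these assumptions, a barrier lowered by roughly 60 meV is enough to account for a tenfold increase in diffusivity at room temperature.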

Check out the work here.

Congratulations to Ji Qi for successfully defending his PhD thesis on Apr 12, 2024. During his time in the Materials Virtual Lab, Ji has made extremely valuable contributions to the development and application of machine learning interatomic potentials (MLIPs). He has applied MLIPs to solid electrolytes, pushing the envelope of their application to extremely complex chemistries (7-element oxides!). He also developed an innovative DIRECT sampling method that enables the fitting of MLIPs with far fewer, or even zero, active learning steps. We wish him all the best in his new job at CATL.

Check out the recording of his PhD thesis defense below.

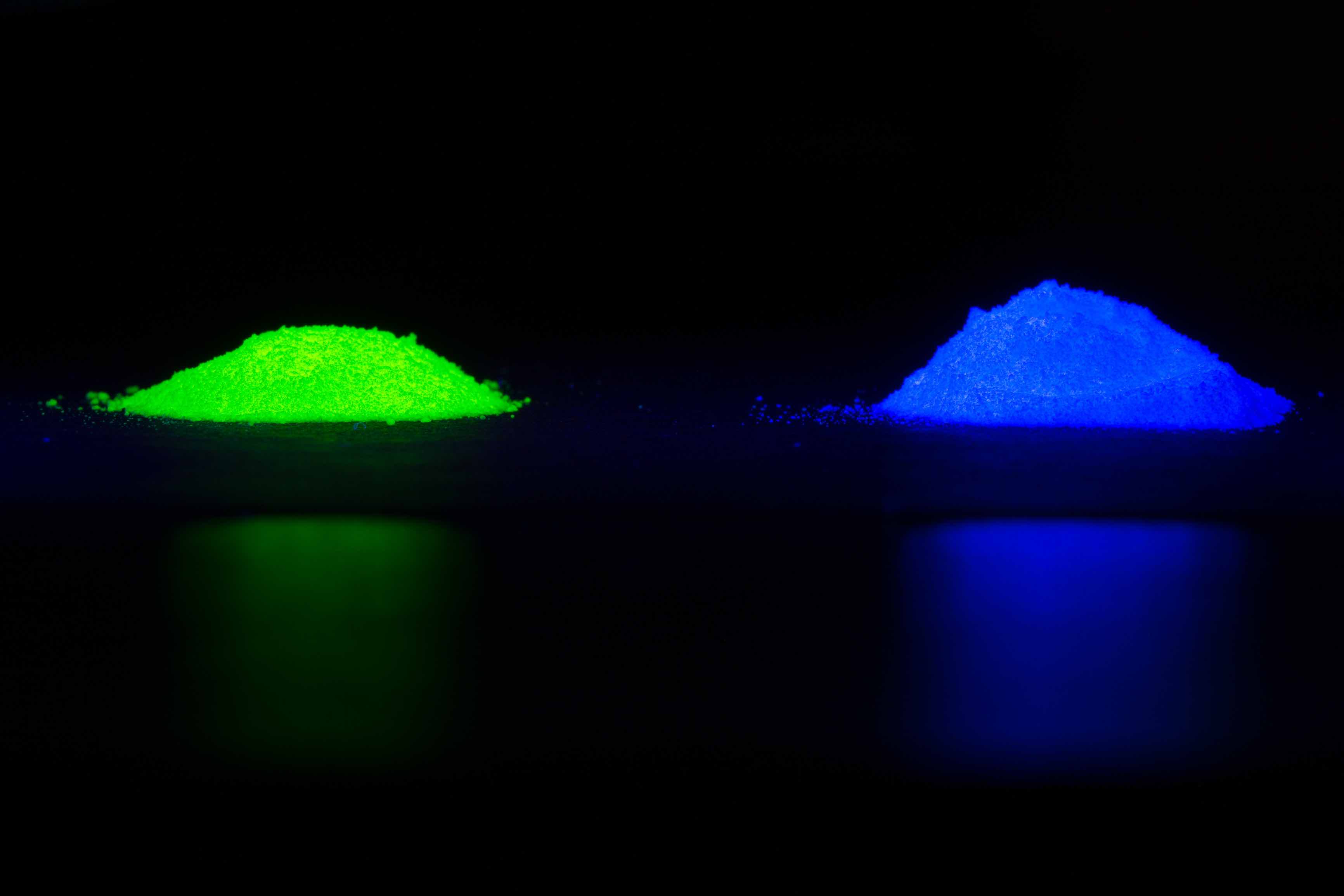

Manas’ final work on “Healable and conductive sulfur iodide for solid-state Li–S batteries” is now out in Nature! This work is a collaboration between Prof Ping Liu’s group and our group. Solid-state Li–S batteries (SSLSBs) are made of low-cost and abundant materials free of supply chain concerns. In this work, we report an S9.3I molecular crystal, which shows a semiconductor-level electrical conductivity. Our group’s main contribution is showing that iodine disrupts the molecular bonding in sulfur, which lowers its melting point and introduces new states into the band gap of sulfur. This lowered melting point enables periodic remelting of the cathode to repair interfaces.

Check out this work here as well as the UCSD press release on this discovery.

Ji’s work on “Robust training of machine learning interatomic potentials with dimensionality reduction and stratified sampling” is now out in npj Computational Materials! Machine learning interatomic potentials (MLIPs) enable accurate simulations of materials at scales beyond those accessible by ab initio methods. In this work, we present DImensionality-Reduced Encoded Clusters with sTratified (DIRECT) sampling as an approach to select a robust training set of structures from a large and complex configuration space. By applying DIRECT sampling to the Materials Project relaxation trajectories dataset, with over one million structures and 89 elements, we develop an improved materials 3-body graph network (M3GNet) universal potential that extrapolates more reliably to unseen structures. We further show that molecular dynamics (MD) simulations with the M3GNet universal potential can be used instead of expensive ab initio MD to rapidly create a large configuration space for target systems. We combined this scheme with DIRECT sampling to develop a reliable moment tensor potential for titanium hydrides without the need for iterative augmentation of training structures.
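The stratified-selection idea can be illustrated with a toy example. The sketch below is not the MAML implementation. It assumes the structures have already been encoded and reduced to a few dimensions (random 2D points stand in for those features here), and it swaps in simple grid binning as a stand-in for the clustering step. All function names are hypothetical.

```python
import math
import random
from collections import defaultdict


def grid_cluster(features, cell_size):
    """Group points by the grid cell they fall in (a stand-in for clustering)."""
    clusters = defaultdict(list)
    for idx, vec in enumerate(features):
        key = tuple(math.floor(x / cell_size) for x in vec)
        clusters[key].append(idx)
    return clusters


def stratified_select(features, clusters, per_cluster=1):
    """Pick up to `per_cluster` points nearest to each cluster centroid."""
    selected = []
    for members in clusters.values():
        dim = len(features[members[0]])
        centroid = [
            sum(features[i][d] for i in members) / len(members) for d in range(dim)
        ]
        ranked = sorted(members, key=lambda i: math.dist(features[i], centroid))
        selected.extend(ranked[:per_cluster])
    return sorted(selected)


# Toy reduced features: one densely sampled region plus three rare outliers.
rng = random.Random(0)
features = [(rng.gauss(0.0, 0.3), rng.gauss(0.0, 0.3)) for _ in range(500)]
features += [(5.0, 5.0), (-4.0, 6.0), (7.0, -3.0)]

clusters = grid_cluster(features, cell_size=0.5)
selected = stratified_select(features, clusters, per_cluster=1)
print(len(features), len(clusters), len(selected))
```

Because each occupied region of feature space contributes the same number of structures, the dense region is thinned out while the rare outliers are always kept, which is the behavior one wants from a training set that must cover a large configuration space.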

Check out this work here. If you want to use DIRECT sampling for your work, please check out our implementation in our MAML repository on GitHub.